What is a structured query log?

Structured logging with Serilog and Seq as an example

Ordinary log records are plain strings, so finding a particular record in a mass of text forces us to use regular expressions.

Structured logging stores records as objects (structures), for example as JSON.
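To make the contrast concrete, here is a minimal sketch in Python (not the Serilog API). The log line, the field names, and the car data are hypothetical and chosen only for illustration. It shows that the text form needs a regular expression to recover a value, while the structured form exposes the same value as a field.

```python
import json
import re

# Unstructured: the fields are buried in free text and must be recovered with a regex.
text_line = "2024-01-15 10:32:07 ERROR Diagnostics failed for Audi A4 built 2019-06-01"
match = re.search(r"for (\w+) (\w+) built (\d{4}-\d{2}-\d{2})", text_line)
manufacturer_from_text = match.group(1) if match else None

# Structured: the same event stored as an object, serialized here as JSON.
json_line = json.dumps({
    "timestamp": "2024-01-15T10:32:07",
    "level": "ERROR",
    "message": "Diagnostics failed",
    "manufacturer": "Audi",      # hypothetical sample values
    "series": "A4",
    "releaseDate": "2019-06-01",
})
event = json.loads(json_line)
manufacturer_from_json = event["manufacturer"]  # plain field access, no pattern needed
```

If the wording of the text message changes, the regular expression silently stops matching, whereas the field lookup keeps working as long as the field name stays the same.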

Serilog

Now the logger object can be passed around via Dependency Injection or used directly.

Suppose we are building a car diagnostics program and need information about the manufacturer, the series, and the release date. And, of course, everything should be stored in structured form.

Since we configured output to the console and to a file, the result is still rendered as a familiar string.

One convenient feature is enriching events with frequently used properties. For a web application these might be the user session, the requested URL, or browser data. More generally, you can add the application version. This is done through the Enrich property, either with the built-in classes or by writing your own.

To print complex objects conveniently to the console or a text file, it is best to tell the Serilog template that the value is a complex object rather than a primitive type by adding the @ symbol. Otherwise the output will be typeof(MyClass).ToString().

Seq is an application for conveniently storing and searching structured logs.

Seq runs as a Windows service that accepts REST requests and stores the data internally in a NoSQL database.

After installing Seq, you also need to add the Serilog.Sinks.Seq NuGet package to the application, and then connect Serilog to Seq.

Now we can conveniently search by our own fields, including comparisons of numbers and dates.

Search parameters can be saved and reused in other parts of the application. Here we add Environment to the Dashboard.

We can also add a real-time Dashboard view of errors that came specifically from the application "MyApp", version "1.2.1", and occurred in the method "Repository.GetUserByIdAndPassword()".

Business requirements

Logs in MySQL

MySQL currently maintains five kinds of logs, and anyone doing reasonably serious work with MySQL databases needs to keep an eye on them. For example, our binary log grows by about a gigabyte per day, while disk space on the server is limited. The binary log is not the only one worth watching, though, since MySQL logs can be quite useful.

So, which logs does MySQL keep? They are:

1. binary log

2. error log

3. slow query log

4. general query log

5. relay log

Each is useful in its own way.

Binary log

The contents of the binary log can be viewed with the mysqlbinlog utility.

Main settings in my.cnf

Log location:

log_bin = /var/log/mysql/mysql-bin.log

Maximum size; the minimum is 4096 bytes and the default is 1073741824 bytes (1 gigabyte):

max_binlog_size = 500M

Number of days the logs are kept:

expire_logs_days = 3
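As a quick arithmetic check of the size limits above, the following Python sketch converts a my.cnf-style size such as 500M to bytes and tests it against the stated minimum and maximum. The helper names are hypothetical, and the sketch assumes the K, M and G suffixes are powers of 1024.

```python
# Size limits quoted in the text for max_binlog_size.
MIN_BYTES = 4096
MAX_BYTES = 1073741824  # 1 gigabyte, also the default

SUFFIXES = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}

def to_bytes(value: str) -> int:
    """Convert a my.cnf-style size such as '500M' to bytes."""
    value = value.strip().upper()
    if value and value[-1] in SUFFIXES:
        return int(value[:-1]) * SUFFIXES[value[-1]]
    return int(value)

def is_valid_binlog_size(value: str) -> bool:
    """Check that the size falls inside the range given in the text."""
    return MIN_BYTES <= to_bytes(value) <= MAX_BYTES

size_in_bytes = to_bytes("500M")
```

With the configuration shown above, 500M works out to 524288000 bytes, which lies inside the allowed range.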

Most frequently used commands

Deleting logs up to a given file:

PURGE BINARY LOGS TO 'mysql-bin.000';

Deleting logs before a given date:

PURGE BINARY LOGS BEFORE 'YYYY-MM-DD hh:mm:ss';
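The date placeholder in the statement above has to be filled in by whoever runs it. As a sketch, the Python helper below (a hypothetical function, not part of MySQL) builds the statement for a cutoff a given number of days in the past; it only produces the SQL text and does not connect to a server.

```python
from datetime import datetime, timedelta

def purge_before_statement(now: datetime, keep_days: int) -> str:
    """Build a PURGE statement that keeps the last `keep_days` days of binary logs."""
    cutoff = now - timedelta(days=keep_days)
    # Format the cutoff as 'YYYY-MM-DD hh:mm:ss', the layout used in the text.
    return f"PURGE BINARY LOGS BEFORE '{cutoff:%Y-%m-%d %H:%M:%S}';"

# Fixed timestamp so the result is reproducible.
statement = purge_before_statement(datetime(2024, 1, 10, 12, 0, 0), keep_days=3)
```

Passing the current time explicitly keeps the helper easy to test; in a real script it would typically be called with datetime.now().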

Error log

Especially useful when failures occur. The log contains information about server shutdowns and startups, as well as messages about critical errors. It may also contain warnings.

Main settings in my.cnf

Log location:

log_error = /var/log/mysql/mysql.err

Flag that controls whether warnings are also written to the log (they are written if the value is greater than zero):

log_warnings = 1

Most frequently used commands

Switching to a new log file:

shell> mysqladmin flush-logs

Copying the old part of the log (necessary because it will be deleted if flush is run again):

shell> mv host_name.err-old backup-directory

Slow query log

If you suspect that the application is slow because of inefficient database queries, the slow query log is the first thing to check. When optimizing queries, this log helps determine what needs to be optimized first.

Main settings in my.cnf

Log location:

log_slow_queries = /var/log/mysql/mysql_slow.log

Execution time, in seconds, after which a query is considered slow; the minimum value is 1 second and the default is 10 seconds:

long_query_time = 10

To log queries that do not use indexes, add the line:

log-queries-not-using-indexes

General query log

The log contains information about client connections and disconnections, as well as all SQL queries that were received. In effect, it is a temporary log. Usually the log is deleted automatically as soon as all commands have been executed (that is, once it is no longer needed). Entries are written in the order in which queries arrive. This log contains all queries to the database, regardless of application or user. So if you want (or need) to analyze which indexes are required or which queries could be optimized, this log can help. The log is useful not only when you need to know which queries are being run against the database, but also when it is clear that a database error occurred yet it is unknown which query was sent (for example, when SQL is generated dynamically). It is recommended to protect the query log with a password, since it may also contain user passwords.

Main settings in my.cnf

Log location:

log = /var/log/mysql/mysql.log

Most frequently used commands

Unlike the other logs, a server restart and the flush command do not trigger creation of a new log. But this can be done manually:

shell> mv host_name.log host_name-old.log

shell> mysqladmin flush-logs

shell> mv host_name-old.log backup-directory

Relay log

Changes initiated by the replication server are logged here. Like the binary log, it consists of numbered files.

Main settings in my.cnf

Log location:

relay-log = /var/log/mysql/mysql-relay-bin.log

Maximum size:

max_relay_log_size = 500M

Most frequently used commands

A new log file can be started only while the secondary (slave) server is stopped:

shell> cat new_relay_log_name.index >> old_relay_log_name.index

shell> mv old_relay_log_name.index new_relay_log_name.index

The flush logs command initiates log rotation.

3 простых шага по исправлению ошибок STRUCTUREDQUERY.LOG

Файл structuredquery.log из Unknown Company является частью unknown Product. structuredquery.log, расположенный в c: \Users \salem \AppData \Local \Temp \ с размером файла 707.00 байт, версия файла Unknown Version, подпись E9F7A753B309882DBA32FE4FEF19E72F.

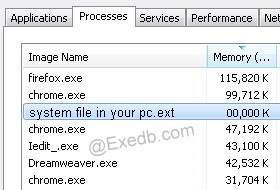

В вашей системе запущено много процессов, которые потребляют ресурсы процессора и памяти. Некоторые из этих процессов, кажется, являются вредоносными файлами, атакующими ваш компьютер.

Чтобы исправить критические ошибки structuredquery.log,скачайте программу Asmwsoft PC Optimizer и установите ее на своем компьютере

1- Очистите мусорные файлы, чтобы исправить structuredquery.log, которое перестало работать из-за ошибки.

2- Очистите реестр, чтобы исправить structuredquery.log, которое перестало работать из-за ошибки.

3- Настройка Windows для исправления критических ошибок structuredquery.log:


Some error messages you may receive in connection with the structuredquery.log file

(structuredquery.log) has encountered a problem and needs to close. We are sorry for the inconvenience.

(structuredquery.log) has stopped working.

structuredquery.log. This program is not responding.

(structuredquery.log) - Application Error: the instruction at 0xXXXXXX referenced memory error, the memory could not be read. Click OK to terminate the program.

(structuredquery.log) is not a valid Windows application.

(structuredquery.log) is missing or not found.

STRUCTUREDQUERY.LOG

Check the processes running on your PC using the online security database. You can use any type of scan to check your PC for viruses, trojans, spyware, and other malware.


Saving Time with Structured Logging

Logging is the ultimate resource for investigating incidents and learning about what is happening within your application. Every application has logs of some type.

Often, however, those logs are messy and it takes a lot of effort to analyze them. In this article, we’re going to look at how we can make use of structured logging to greatly increase the value of our logs.

We’ll go through some very hands-on tips on what to do to improve the value of an application’s log data and use Logz.io as a logging platform to query the logs.

This article is accompanied by a working code example on GitHub.

What are Structured Logs?

“Normal” logs are unstructured. They usually contain a message string:

This message contains all the information that we want to have when we’re investigating an incident or analyzing an issue:

All the information is in that log message, but it’s hard to query for this information! Since all the information is in a single string, this string has to be parsed and searched if we want to get specific information out of our logs.

If we want to view only the logs of a specific logger, for example, the log server would have to parse all the log messages, check them for a certain pattern that identifies the logger, and then filter the log messages according to the desired logger.

Structured logs contain the same information but in, well, structured form instead of an unstructured string. Often, structured logs are presented in JSON:

This JSON structure allows log servers to efficiently store and, more importantly, retrieve the logs.
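As an illustration of what producing such events can look like, here is a minimal sketch using Python's standard logging module rather than the article's Java stack. The formatter class, the field names, and the logger name are assumptions made for this example, not the format used by any particular log server.

```python
import io
import json
import logging

class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps({
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "thread": record.threadName,
            "message": record.getMessage(),
        })

# Write to an in-memory stream so the example can read its own output.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("com.example.DomainService")  # hypothetical logger name
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

logger.info("This is a log message")
event = json.loads(stream.getvalue())
```

Because the logger name is now its own field, filtering by logger becomes a comparison on event["logger"] instead of a pattern match over the whole message.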

But the value of structured logs doesn’t end here: we can add any custom fields to our structured log events that we wish! We can add contextual information that can help us identify issues, or we can add metrics to the logs.

With all the data that we now have at our fingertips we can create powerful log queries and dashboards and we’ll find the information we need even when we’ve just been woken up in the middle of a night to investigate an incident.

Let’s now look into a few use cases that show the power of structured logging.

Add a Code Path to All Log Events

The first thing we’re going to look at is code paths. Each application usually has a couple of different paths that incoming requests can take through the application. Consider this diagram:

This example has (at least) three different code paths that an incoming request can take:

Each of these code paths can have different characteristics. The domain service is involved in all three code paths. During an incident that involves an error in the domain service, it will help greatly to know which code path has led to the error!

If we didn’t know the code path, we’d be tempted to make guesses during an incident investigation that lead nowhere.

So, we should add the code path to the logs! Here’s how we can do this with Spring Boot.

Adding the Code Path for Incoming Web Requests

In Java, the SLF4J logging library provides the MDC class (Mapped Diagnostic Context). This class allows us to add custom fields to all log events that are emitted in the same thread.

To add a custom field for each incoming web request, we need to build an interceptor that adds the codePath field at the start of each request, before our web controller code is even executed.

We can do this by implementing the HandlerInterceptor interface:

Depending on the application, the logic might be vastly different here, of course. This is just an example.

In the postHandle() method, we shouldn’t forget to call MDC.remove() for every field we set. Otherwise, the thread keeps those fields even after it returns to the thread pool, and the next request served by that thread might still carry them with the wrong values.

To activate the interceptor, we need to add it to the InterceptorRegistry :

That’s it. All log events that are emitted in the thread of an incoming request now have the codePath field.

If any request creates and starts a child thread, make sure to call MDC.put() at the start of the new thread’s life, as well.
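The set-then-clean-up pattern described above is not specific to Java. As a rough analogue, the Python sketch below uses contextvars and a logging filter in the role that MDC plays in SLF4J. It is not the Spring interceptor itself; the handler, the logger name, and the rule that maps a path to "admin" or "user" are hypothetical.

```python
import contextvars
import logging

# Per-context storage, playing the role MDC plays in SLF4J.
code_path = contextvars.ContextVar("code_path", default=None)

class CodePathFilter(logging.Filter):
    """Copy the current code path onto every record that passes through."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.codePath = code_path.get()
        return True

class ListHandler(logging.Handler):
    """Collect records in memory so the example can inspect them."""

    def __init__(self) -> None:
        super().__init__()
        self.records = []

    def emit(self, record: logging.LogRecord) -> None:
        self.records.append(record)

handler = ListHandler()
handler.addFilter(CodePathFilter())
logger = logging.getLogger("interceptor_sketch")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

def handle_request(path: str) -> None:
    # "Before" step of the interceptor: set the field before any handler code runs.
    token = code_path.set("admin" if path.startswith("/admin") else "user")
    try:
        logger.info("handling %s", path)
    finally:
        # Cleanup step: always reset, so the value cannot leak into the next request.
        code_path.reset(token)

handle_request("/admin/users")
handle_request("/profile")
logger.info("outside any request")
code_paths = [record.codePath for record in handler.records]
```

The try/finally block is the important part: the field is removed even when the request fails, which is the same concern the text raises about threads returning to a pool.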

Check out the log querying section to see how we can use the code path in log queries.

Adding the Code Path in a Scheduled Job

In Spring Boot, we can easily create scheduled jobs by using the @Scheduled and @EnableScheduling annotations.

To add the code path to the logs, we need to make sure to call MDC.put() as the first thing in the scheduled method:

To make the logs from a scheduled job even more valuable, we could add additional fields:

With these fields in the logs, we can query the log server for a lot of useful information!

Add a User ID to User-Initiated Log Events

The bulk of work in a typical web application is done in web requests that come from a user’s browser and trigger a thread in the application that creates a response for the browser.

Imagine some error happened and the stack trace in the logs reveals that it has something to do with a specific user configuration. But we don’t know which user the request was coming from!

To alleviate this, it’s immensely helpful to have some kind of user ID in all log events that have been triggered by a user.

Since we know that incoming web requests are mostly coming directly from a user’s browser, we can add the username field in the same LoggingInterceptor that we’ve created to add the codePath field:

Every log event emitted from the thread serving the request will now contain the username field with the name of the user.

With that field, we can now filter the logs for requests of specific users. If a user reports an issue, we can filter the logs for their name and immensely reduce the number of logs we have to sift through.

Depending on regulations, you might want to log a more opaque user ID instead of the user name.

Check out the log querying section to see how we can use the user ID to query logs.

Add a Root Cause to Error Log Events

When there is an error in our application, we usually log a stack trace. The stack trace helps us to identify the root cause of the error. Without the stack trace, we wouldn’t know which code was responsible for the error!

But stack traces are very unwieldy if we want to run statistics on the errors in our application. Say we want to know how many errors our application logs in total each day and how many of those are caused by which root cause exception. We’d have to export all stack traces from the logs and do some manual filtering magic on them to get an answer to that question!

If we add the custom field rootCause to each error log event, however, we can filter the log events by that field and then create a histogram or a pie chart of the different root causes in the UI of the log server without even exporting the data.

A way of doing this in Spring Boot is to create an @ExceptionHandler :

That’s it. All the log events that print a stack trace will now have a field rootCause and we can filter by this field to learn about the error distribution in our application.
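The core of such a handler is finding the innermost exception in a chain of wrapped exceptions. The following Python sketch shows that step on its own, outside of Spring; the function names and the failing configuration loader are hypothetical.

```python
def root_cause(error: BaseException) -> BaseException:
    """Follow the chain of causes down to the original exception."""
    seen = set()
    current = error
    while id(current) not in seen:  # guard against cyclic chains
        seen.add(id(current))
        nxt = current.__cause__ or current.__context__
        if nxt is None:
            break
        current = nxt
    return current

def load_config() -> None:
    """Hypothetical operation that wraps a low-level error in a higher-level one."""
    try:
        int("not a number")
    except ValueError as exc:
        raise RuntimeError("could not load user configuration") from exc

try:
    load_config()
except RuntimeError as exc:
    # The class name of the root cause becomes a custom field on the log event.
    log_fields = {"message": str(exc), "rootCause": type(root_cause(exc)).__name__}
```

Logging only the class name keeps the field low in cardinality, which is what makes it practical to group and chart.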

Check out the log querying section to see how we can create a chart with the error distribution of our application.

Add a Trace ID to all Log Events

If we’re running more than one service, for example in a microservice environment, things can quickly get complicated when analyzing an error. One service calls another, which calls another service and it’s very hard (if at all possible) to trace an error in one service to an error in another service.

A trace ID helps to connect log events in one service and log events in another service:

In the example diagram above, Service 1 is called and generates the trace ID “1234”. It then calls Services 2 and 3, propagating the same trace ID to them, so that they can add the same trace ID to their log events, making it possible to connect log events across all services by searching for a specific trace ID.

For each outgoing request, Service 1 also creates a unique “span ID”. While a trace spans the whole request/response cycle of Service 1, a span only spans the request/response cycle between one service and another.
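The relationship between trace IDs and span IDs can be sketched in a few lines of Python. This is a toy model under stated assumptions, not how Spring Cloud Sleuth or any tracing standard implements it: the header names X-Trace-Id and X-Span-Id and both helper functions are hypothetical.

```python
import uuid

TRACE_HEADER = "X-Trace-Id"  # hypothetical header names, chosen for this sketch
SPAN_HEADER = "X-Span-Id"

def incoming_trace_id(headers: dict) -> str:
    """Reuse the caller's trace ID, or start a new trace if there is none."""
    return headers.get(TRACE_HEADER) or uuid.uuid4().hex

def outgoing_headers(trace_id: str) -> dict:
    """Same trace ID for every downstream call, fresh span ID per call."""
    return {TRACE_HEADER: trace_id, SPAN_HEADER: uuid.uuid4().hex}

# Service 1 receives a request without a trace ID and calls two other services.
trace_id = incoming_trace_id({})
to_service_2 = outgoing_headers(trace_id)
to_service_3 = outgoing_headers(trace_id)

# Service 2 picks up the trace ID from the headers it received.
trace_id_in_service_2 = incoming_trace_id(to_service_2)
```

Each service would then add the trace ID (and span ID) as fields on its own log events, so that a search for one trace ID returns the events of all participating services.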

We could implement a tracing mechanism like this ourselves, but there are tracing standards and tools that use these standards to integrate into tracing systems like Logz.io’s distributed tracing feature.

So, we’ll stick to using a standard tool for this. In the Spring Boot world, this is Spring Cloud Sleuth, which we can add to our application by simply adding it to our pom.xml :

This automatically adds trace and span IDs to our logs and propagates them from one service to the next via request headers when using supported HTTP clients. You can read more about Spring Cloud Sleuth in the article “Tracing in Distributed Systems with Spring Cloud Sleuth”.

Add Durations of Certain Code Paths

The total duration our application requires to answer a request is an important metric. If it’s too slow, users get frustrated.

Usually, it’s a good idea to expose the request duration as a metric and create dashboards that show histograms and percentiles of the request duration so that we know the health of our application at a glance and maybe even get alerted when a certain threshold is breached.

We’re not looking at the dashboards all the time, however, and we might be interested not only in the total request duration but in the duration of certain code paths. When analyzing logs to investigate an issue, it can be an important clue to know how long a certain path in the code took to execute.

In Java, we might do something like this:

This log event will now have the field thirdPartyCallDuration which we can filter and search for in our logs. We might, for example, search for instances where this call took extra long. Then, we could use the user ID or trace ID, which we also have as fields on the log event to figure out a pattern when this takes especially long.
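The measuring pattern itself is small. As a language-neutral sketch in Python (the article's own example is in Java), the code below times a stand-in for the external call and attaches the result as a numeric field. The function names and the 10 ms sleep are hypothetical.

```python
import time

def call_third_party() -> str:
    """Stand-in for a slow external call (hypothetical)."""
    time.sleep(0.01)
    return "ok"

def timed_call() -> dict:
    start = time.perf_counter()
    result = call_third_party()
    duration_ms = (time.perf_counter() - start) * 1000
    # The duration becomes a numeric field next to the message,
    # so the log server can filter and sort on it.
    return {
        "message": "third-party call finished",
        "result": result,
        "thirdPartyCallDuration": round(duration_ms),
    }

event = timed_call()
```

Storing the duration as a number rather than embedding it in the message text is what allows range queries such as "longer than 500 ms".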

Check out the log querying section to see how we can filter for long queries using Logz.io.

Querying Structured Logs in Logz.io

If we have set up logging to Logz.io as described in the article about per-environment logging, we can now query the logs in the Kibana UI provided by Logz.io.

Error Distribution

We can, for example, query for all log events that have a value in the rootCause field:

This will bring up a list of error events that have a root cause.
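Conceptually, such a query filters on the presence of a field and the chart that follows groups by its value. The Python sketch below performs the same two steps on a small in-memory list; the events and exception names are made-up sample data, and a real log server would run this over its index instead.

```python
from collections import Counter

# Hypothetical structured log events.
events = [
    {"message": "request served", "username": "alice"},
    {"message": "request failed", "username": "bob", "rootCause": "ValueError"},
    {"message": "request failed", "username": "alice", "rootCause": "TimeoutError"},
    {"message": "request failed", "username": "bob", "rootCause": "ValueError"},
]

# Step 1: keep only events that have a value in the rootCause field.
with_root_cause = [e for e in events if "rootCause" in e]

# Step 2: count events per root cause, the data behind a pie chart or histogram.
distribution = Counter(e["rootCause"] for e in with_root_cause)

# Fields combine freely: errors of one specific user.
bob_errors = [e for e in with_root_cause if e["username"] == "bob"]
```

None of these steps needs to parse a message string, which is the practical payoff of having rootCause and username as separate fields.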

We can also create a Visualization in the Logz.io UI to show the distribution of errors in a given time frame:

Error Distribution Across a Code Path

Say, for example, that users are complaining that scheduled jobs aren’t working correctly. If we have added a job_status field to the scheduled method code, we can filter the logs by those jobs that have failed:

To get a more high-level view, we can create another pie chart visualization that shows the distribution of job_status and rootCause :

We can now see that the majority of our scheduled jobs are failing! We should add some alerting around this! We can also see which exceptions are the root causes of most of the failed scheduled jobs and start to investigate.

Checking for a User’s Errors

Or, let’s say that the user with the username “user” has raised a support request specifying a rough date and time when it happened. We can filter the logs using the query username: user to only show the logs for that user and can quickly zero in on the cause of the user’s issue.

We can also extend the query to show only log events of that user that have a rootCause to directly learn about what went wrong when.

Structure Your Logs

This article showed just a few examples of how we can add structure to our log events and make use of that structure while querying the logs. Anything that should later be searchable in the logs should be a custom field in the log events. The fields that make sense to add to the log events highly depend on the application we’re building, so make sure to think about what information would help you to analyze the logs when you’re writing code.

You can find the code samples discussed in this article on GitHub.


Tom Hombergs

As a professional software engineer, consultant, architect, and general problem solver, I’ve been practicing the software craft for more than ten years and I’m still learning something new every day. I love sharing the things I learned, so you (and future me) can get a head start.


Structured Query Language (SQL)

What is Structured Query Language (SQL)?

Structured Query Language (SQL) is a specialized programming language designed for interacting with a database. SQL allows us to perform three main tasks:

Building Blocks of SQL

Modern SQL consists of three major types of query languages. Each of the languages corresponds to a function or task described above. All three combine to form a fully functioning language that allows a user to perform all possible functions on a relational database.

Commonly Used SQL Statements

The following is a list of commonly used SQL commands that can be used to create tables, insert data, change the structure of the tables, and query the data.

Defining and Creating Tables

CREATE

The CREATE statement is used to create tables in a database. The statement can define the field names and field data types within a table. The CREATE statement is also used to define the unique identities of the table using primary key constraints. It is also used to describe the relationships between tables by defining a foreign key.

Template:

CREATE TABLE [tableName] (

ALTER

The ALTER statement is used to change the structure of a table in the database. The statement can be used to create a new column or change the data type of an existing column.

Template:

ALTER TABLE [tableName]

ADD Column_1 Datatype_1

DROP

The DROP statement is used to delete a table from a database. It must be used with caution as deletion is irreversible.

Template:

DROP TABLE [tableName]

Adding, Modifying, and Deleting Data

INSERT

The INSERT statement is used to add records or rows to a table. The name of the table to insert records into is defined first. Then, the column names within the table are defined. Finally, the values of the records are defined to insert into these columns.

Template:

INSERT INTO [tableName] (Field_1, …, Field_N)

VALUES (Value_1, …, Value_N)

UPDATE

The UPDATE statement is used to modify records in a table. The statement changes the values of a specified subset of records held in the defined columns of the table. It is a good practice to filter rows using a WHERE clause when updating records. Otherwise, all records will be altered by the UPDATE statement.

Template:

UPDATE [tableName]

SET Column_1 = Value_1, …, Column_N = Value_N

WHERE [filter criteria]
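The effect of omitting the WHERE clause is easy to demonstrate. The sketch below uses Python's built-in sqlite3 module with an in-memory database; the accounts table and its contents are hypothetical and exist only for this illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, status TEXT)")
conn.executemany(
    "INSERT INTO accounts (id, status) VALUES (?, ?)",
    [(1, "active"), (2, "active"), (3, "active")],
)

# With a WHERE clause, only the matching row is changed.
filtered = conn.execute("UPDATE accounts SET status = 'closed' WHERE id = 2")
rows_with_where = filtered.rowcount

# Without a WHERE clause, every row in the table is changed.
unfiltered = conn.execute("UPDATE accounts SET status = 'archived'")
rows_without_where = unfiltered.rowcount

statuses = [row[0] for row in conn.execute("SELECT status FROM accounts ORDER BY id")]
```

Checking the affected row count after an UPDATE is a cheap way to notice that a filter was broader than intended.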

DELETE

The DELETE statement is used to delete rows from a table based on criteria defined using a WHERE clause. The statement should be used carefully, as all deletion in a database is permanent. If a mistake is made using a DELETE statement, the database will need to be restored from a backup.

Template:

DELETE FROM [tableName]

WHERE [filter criteria]

Extracting and Analyzing Data

SELECT

The SELECT statement is one of the most used statements in SQL. It is used to select rows from one or more tables in a database. A SELECT statement is usually used with a WHERE clause to return a subset of records based on a user-defined criterion. The SELECT statement is used to conduct most data analysis tasks as it allows the user to extract and transform the desired records from a database.

Template:

For specific columns:

SELECT Column_1, …, Column_K FROM [tableName]

WHERE [filter criteria]

For all columns:

SELECT * FROM [tableName]

WHERE [filter criteria]

Building a Financial Database

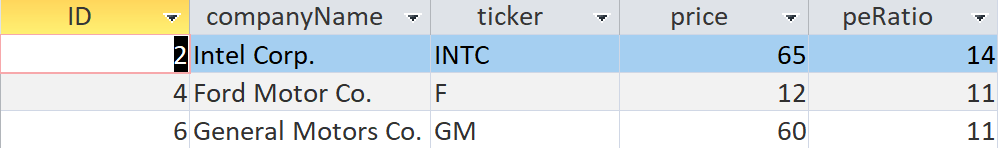

In the following example, we will use some of the SQL statements discussed above to create a financial database with pricing and fundamental data. Microsoft Access is an accessible tool that can be used to build relational databases within the Microsoft Office ecosystem.

First, we use the CREATE statement to define the structure of the table. Our table will have the following columns and data types:

We can follow the template described above to write the CREATE statement. The following statement creates the table:

CREATE TABLE priceTable (

Next, we insert some records into the table using the INSERT statement. Following are two examples of inserting rows in our table.

INSERT INTO priceTable (ID, [companyName], [ticker], [price], [peRatio])

VALUES (5, "Walmart", "WMT", 138, 26);

INSERT INTO priceTable (ID, [companyName], [ticker], [price], [peRatio])

VALUES (6, "General Motors Co.", "GM", 60, 11);

Suppose we want to analyze the stocks in the database and find the best value stocks available. We can define value stocks as those with a price-to-earnings ratio (P/E ratio, the relationship between a company’s stock price and its earnings per share) of less than twenty. This is accomplished using a SELECT statement with a WHERE clause, as shown below:

SELECT * FROM priceTable WHERE peRatio < 20;
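The whole example can also be reproduced end to end without Microsoft Access. The sketch below swaps in SQLite through Python's built-in sqlite3 module. The column types are assumptions, since the text does not preserve them; the two rows are the ones inserted above, and the threshold of twenty comes from the definition of value stocks.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Column names follow the INSERT statements; the data types are assumed.
conn.execute("""
    CREATE TABLE priceTable (
        ID INTEGER PRIMARY KEY,
        companyName TEXT,
        ticker TEXT,
        price REAL,
        peRatio REAL
    )
""")

conn.executemany(
    "INSERT INTO priceTable (ID, companyName, ticker, price, peRatio) "
    "VALUES (?, ?, ?, ?, ?)",
    [
        (5, "Walmart", "WMT", 138, 26),
        (6, "General Motors Co.", "GM", 60, 11),
    ],
)

# Value stocks: price-to-earnings ratio below twenty.
value_stocks = conn.execute(
    "SELECT ticker, peRatio FROM priceTable WHERE peRatio < 20"
).fetchall()
```

Of the two rows inserted, only General Motors has a P/E ratio below twenty, so it is the only row the query returns.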

A Short History of SQL

The foundations of Structured Query Language (SQL) were laid in a 1970 paper, “A Relational Model of Data for Large Shared Data Banks,” by Edgar F. Codd. Codd introduced the relational model and relational algebra, which is used to define relationships between data tables and is the theoretical foundation of SQL. The first implementation of SQL was developed by two researchers at IBM: Donald Chamberlin and Raymond Boyce.

Additional Resources

Learn more about Structured Query Language through CFI’s SQL Fundamentals course. CFI is the official provider of the Business Intelligence & Data Analyst (BIDA)® certification program, designed to transform anyone into a world-class financial analyst.
